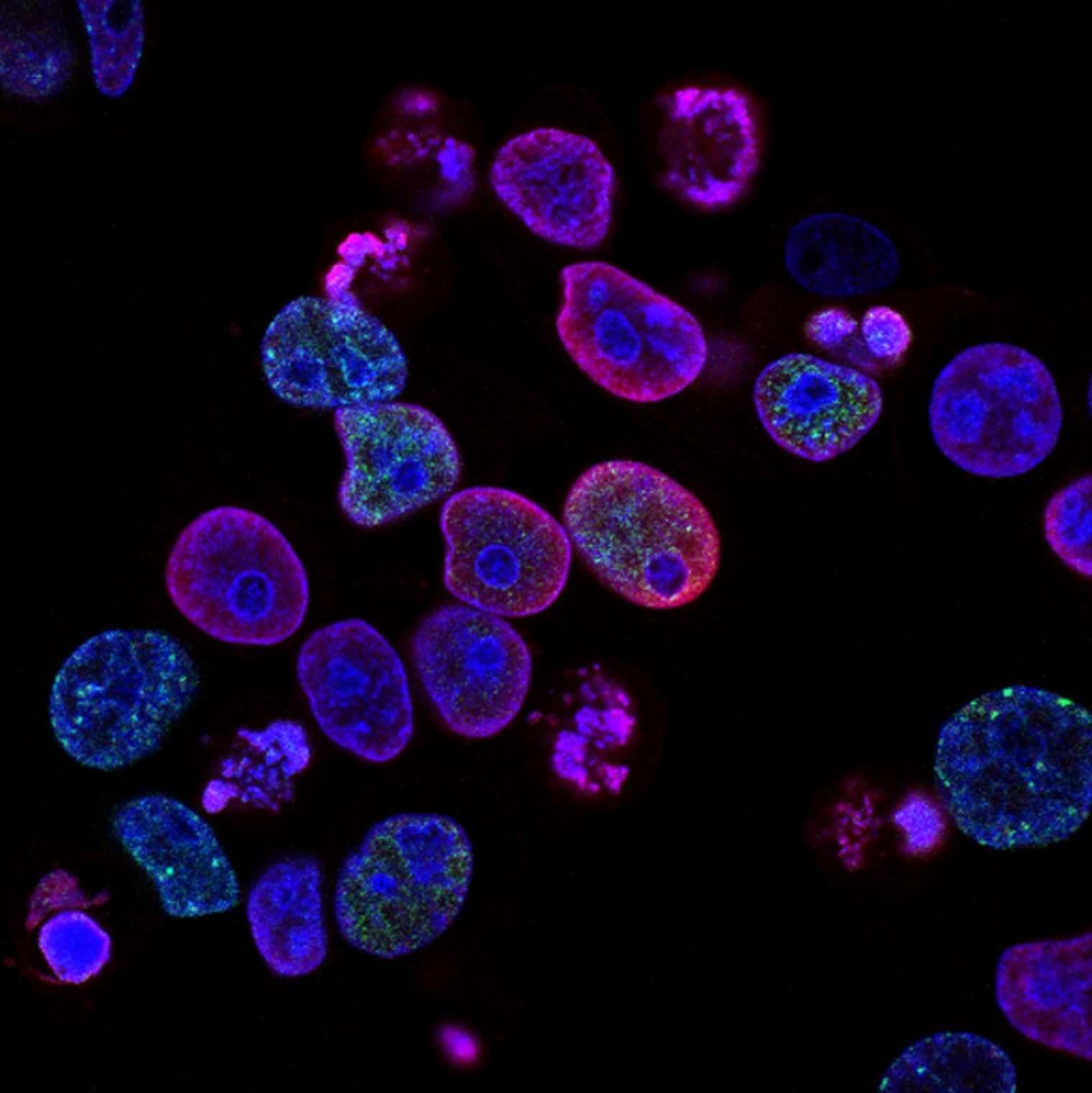

In the Represent theme, we develop AI and ML methods that learn unified, multimodal representations of biological systems, integrating diverse data types, from single-cell and spatial transcriptomics and proteomics to histopathology. These multimodal representations capture heterogeneous cellular states, tissue architecture, and cross-modal interactions, providing a structured view of complex biological variation.

Key projects under this theme include:

SNSF-funded project: Learning context-aware, interpretable representations of the tumor microenvironment to enable AI-driven precision oncology, which focuses on capturing the spatial and cellular context of tumors to guide precision treatment strategies.

Chan Zuckerberg Initiative-funded project: Towards a Unified Atlas of Spatial Proteomics Powered by Generative AI, aimed at creating a comprehensive map of tissue proteomics by integrating spatial and molecular data using generative AI methods.

Swiss Cancer Research-funded project: AI-powered precision cancer therapy for mesothelioma, in collaboration with the Brbic lab (EPFL), the Homicsko lab (CHUV) and Prof. Berezowska (Institut universitaire de Pathologie, CHUV), which focuses on developing AI models to guide personalized treatment strategies for mesothelioma patients.

Key publications:

VirtualMultiplexer: a generative AI framework that translates real H&E images to matching immunohistochemistry (IHC) images for several protein markers, accelerating histopathology workflows. Paper Code

MatchCLOT: a contrastive learning framework that aligns single-cell profiles across modalities. Paper Code

By learning joint multimodal representations, we aim to reveal latent biological programs, map cellular and tissue heterogeneity, and provide interpretable embeddings that guide both fundamental discovery and therapeutic prioritization.
