In the Interpret theme, we develop AI methods that provide explainable and biologically meaningful insights from complex biomedical data. Our goal is to uncover the mechanisms and spatial patterns underlying AI model predictions, linking computational outputs to disease biology, tissue organisation, and potential therapeutic targets.

Key projects under this theme include:

Owkin-funded project: An Interpretable Graph Representation Learning Framework from MOSAIC Spatial Omics Data, in collaboration with the Gottardo lab and Homicsko lab (CHUV), which aims to model the spatial and functional relationships between cells in tissues while ensuring interpretability.

Swiss Cancer Research-funded project: AI-powered precision cancer therapy for mesothelioma, in collaboration with the Brbic lab (EPFL), the Homicsko lab (CHUV) and Prof. Berezowska (Institut universitaire de Pathologie, CHUV), which focuses on developing interpretable AI models that guide personalised treatment strategies for mesothelioma patients.

Key publications:

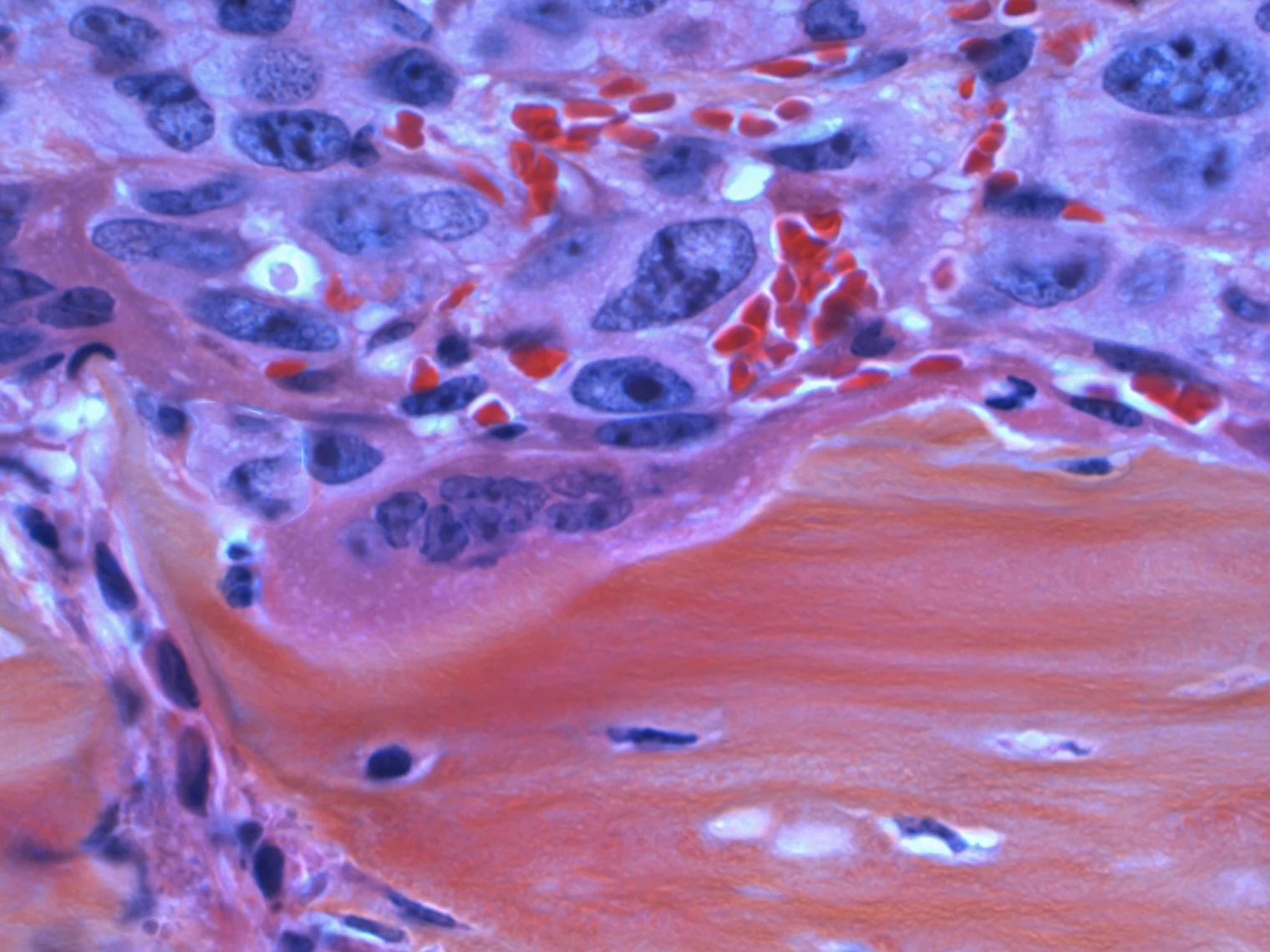

ATHENA: A framework for interpretable spatial analysis of tumor microenvironments, revealing heterogeneity metrics, cellular interactions and spatial biomarkers that drive disease outcomes. Paper | Code

ProteinPNet: An inherently interpretable concept learning framework based on prototypical part networks that directly learns discriminative, interpretable spatial prototypes from spatial proteomics data. Paper | Code

Through interpretable modeling, we aim to link AI predictions to biological mechanisms, identify spatial and molecular biomarkers, and support translational discoveries that inform precision medicine.
